Disclaimer:

Please be aware that the content herein has not been peer reviewed. It consists of personal reflections, insights, and learnings of the contributor(s). It may not be exhaustive, nor does it aim to be authoritative knowledge.

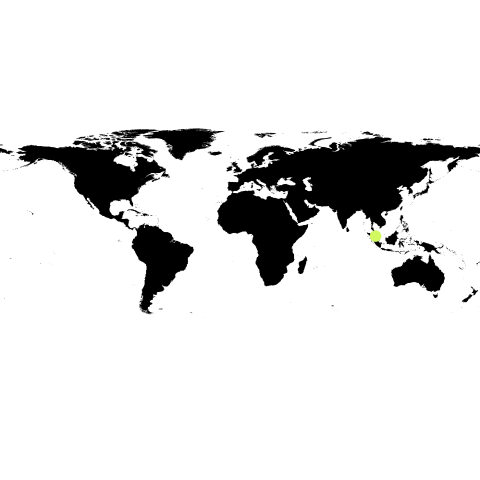

Animal Projects & Environmental Education Sdn. Bhd. (APE Malaysia) is an organization that works collaboratively with stakeholders to develop sustainable conservation solutions. People and organizations can become involved in these projects through APE’s volunteering program, through its education program, or by setting up Corporate Responsibility projects. Whether used singly or in combination, these methods are applied in creative ways to build distinct and dynamic programmes that reflect APE’s model of addressing environment + economics + people in everything it does.

15 Life on land

17 Partnerships for the goals
